- Create a new user in AWS with AdministratorAccess and get your security credentials.
- Go to the AWS CLI page and configure your AWS credentials on your local machine.
- Set up a local environment with Google Colab: `C:\>jupyter notebook --NotebookApp.allow_origin='' --port=8888 --NotebookApp.port_retries=0`
- After running the last command, copy the localhost URL; you will need it for Colab.
- In Colab, go to Connect -> "Connect to local runtime", paste the URL copied in the previous step into Backend URL, and click Connect.
- Upload the file AWS-IAC-IAM-EC2-S3-Redshift.ipynb and use it in your Colab local environment:
  - Create the required S3 buckets (uber-tracking-expenses-bucket-s3, airflow-runs-receipts).
  - Move the Uber receipts from your local computer to S3.
  - Create clients for IAM, EC2, and the Redshift cluster.
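The client, bucket, and upload steps could look roughly like the sketch below. It is not the notebook's actual code: it assumes boto3 is installed and your AWS credentials are already configured, and the region, the `receipts` key prefix, and the helper names (`make_clients`, `create_buckets`, `upload_receipts`) are hypothetical choices made for illustration. Only the two bucket names come from the article.

```python
from pathlib import Path

# Bucket names are the ones listed in the article.
BUCKETS = ["uber-tracking-expenses-bucket-s3", "airflow-runs-receipts"]
REGION = "us-west-2"  # assumption: replace with the region you actually use


def make_clients(region=REGION):
    """Create boto3 clients for IAM, EC2, S3 and Redshift."""
    import boto3  # imported lazily so the helpers below can be tested without it

    return {name: boto3.client(name, region_name=region)
            for name in ("iam", "ec2", "s3", "redshift")}


def create_buckets(s3, names=BUCKETS, region=REGION):
    """Create each bucket; us-east-1 must not receive a LocationConstraint."""
    for name in names:
        if region == "us-east-1":
            s3.create_bucket(Bucket=name)
        else:
            s3.create_bucket(
                Bucket=name,
                CreateBucketConfiguration={"LocationConstraint": region},
            )


def upload_receipts(s3, folder, bucket, prefix="receipts"):
    """Upload every .eml file in `folder` and return the S3 keys written."""
    uploaded = []
    for path in sorted(Path(folder).glob("*.eml")):
        key = f"{prefix}/{path.name}"
        s3.upload_file(str(path), bucket, key)
        uploaded.append(key)
    return uploaded


# Example usage (needs real AWS credentials; the local folder is hypothetical):
# clients = make_clients()
# create_buckets(clients["s3"])
# upload_receipts(clients["s3"], "receipts_folder", BUCKETS[0])
```

Which of the two buckets should receive the raw receipts is not stated in the text, so the usage lines pick the first one purely as a placeholder.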

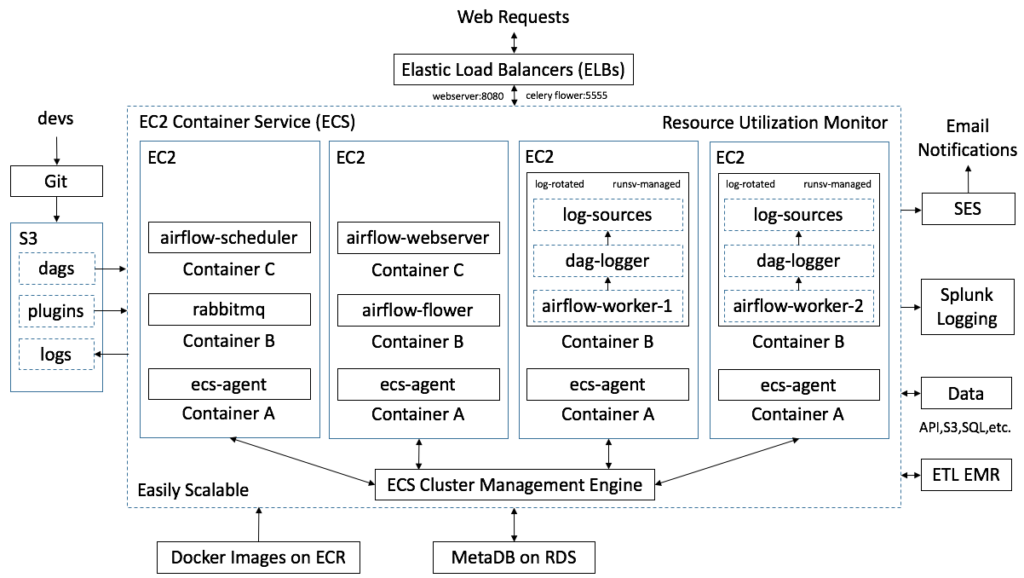

AIRFLOW ETL INSTALL

Data sources

Every time an Uber Eats or Uber Rides service ends, you receive a payment receipt by email. The receipt contains the details of the service and is attached to the email with the extension .eml. Take a look at the image: both receipts belong to the details sent by Uber about Eats and Rides services. These receipts will be our original data sources; in my case, I had already downloaded all of them from my email to my local computer.

The aim of this section is to create a Redshift cluster on AWS and keep it available for use by the Airflow DAG. In addition to preparing the infrastructure, the file AWS-IAC-IAM-EC2-S3-Redshift.ipynb will help you to have an alternative staging zone in S3 as well. Below we list the different steps and the things carried out in this file:

- Install git-bash for Windows. Once installed, open git bash and download this repository; this will download the dags folder, the docker-compose.yaml file, and other files: MINGW64 /c $ git clone
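Since the receipts arrive as .eml files, a first look at one of them can be taken with Python's standard `email` package. This is a minimal sketch, not the parsing used by the project's DAG: the function name `read_receipt` is hypothetical, and which fields you extract from the body will depend on the actual layout of your receipts.

```python
from email import policy
from email.parser import BytesParser


def read_receipt(raw_bytes):
    """Return the main headers and the HTML (or plain-text) body of one .eml file."""
    msg = BytesParser(policy=policy.default).parsebytes(raw_bytes)
    # Prefer the HTML part if present, otherwise fall back to plain text.
    body = msg.get_body(preferencelist=("html", "plain"))
    return {
        "subject": msg["subject"],
        "from": msg["from"],
        "date": msg["date"],
        "body": body.get_content() if body is not None else "",
    }


# Example usage (the file path is hypothetical):
# from pathlib import Path
# info = read_receipt(Path("receipts_folder/some_receipt.eml").read_bytes())
# print(info["subject"], info["date"])
```

Keeping the function on raw bytes rather than on a file path means the same code works whether the receipt comes from the local folder or from an object downloaded from the S3 staging zone.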

Have you heard phrases like "Hungry? You're in the right place" or "Request a trip, hop in, and relax"? Both phrases belong to Uber, the emblematic company with disruptive technology that is changing the way the world moves through two strong businesses with multimillion-dollar revenue, Uber Rides and Uber Eats. Both businesses are now part of our daily lives. The goal of this article or project is to track Uber Rides and Uber Eats expenses through a data engineering process using technologies such as Apache Airflow, AWS Redshift and Microsoft Power BI. The article briefly describes the data sources involved and the data model suitable for a reporting strategy. Have you ever thought about how much money you spend on these services? Bring a drink, make yourself comfortable and keep reading; I will show you a quick, fun, and easy way to automate everything step by step.
